Introduction

One of the highlights of my career has been the opportunity to serve as a CISO, designing, managing and growing a successful security program. Through this series of posts, I’m sharing some of the things I’ve learned along the way.

In Part 1 of this series, I gave a brief background on how I became a CISO and outlined the process of defining the security program’s purpose and mission statement. In Part 2, I talked about scoping and organizing the program. In this third installment, I’m going to kick off what will be a multi-part series about how to measure the security program.

Today I’ll introduce the concept of measuring Capability and why it’s important. I’ll follow that in the next post with a walkthrough of measuring Performance (outcomes) and how to communicate these metrics/measures to multiple stakeholders, including the Board.

Measuring what matters

If you can’t measure it, you can’t improve it. - Peter Drucker

I don’t think anyone would argue against the need for security program metrics, but I also don’t think we always do a good job of measuring the right things.

Let’s take a function like vulnerability management. One common metric is time-to-patch. Let’s say your data indicates that 100% of all endpoints were fully patched within 15 days last month. Seems pretty good right? But how confident are you that you’re seeing all of the endpoints to begin with? What if it turns out that the universe of endpoints you’re currently monitoring only represents 70% of what’s actually operating in your environment? And for the other 30%, you don’t have any reliable method to monitor or centrally manage updates. All of a sudden that metric isn’t looking too good and, what’s worse, may have been giving a false sense of security.
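To make that arithmetic concrete, here is a minimal Python sketch. The function name `effective_patch_rate` and the endpoint counts are illustrative assumptions, not data from a real environment. It assumes that any endpoint you cannot see has to be treated as unpatched, which is a deliberately conservative choice.

```python
def effective_patch_rate(reported_rate: float, monitored: int, actual: int) -> float:
    """Scale a reported patch rate by the share of endpoints actually monitored.

    Assumption: unmonitored endpoints cannot be confirmed as patched,
    so they are counted as unpatched.
    """
    if actual <= 0 or not 0 <= monitored <= actual:
        raise ValueError("expected 0 <= monitored <= actual and actual > 0")
    coverage = monitored / actual
    return reported_rate * coverage


# Hypothetical counts matching the example: 100% reported, 70% of endpoints monitored.
rate = effective_patch_rate(reported_rate=1.0, monitored=7_000, actual=10_000)
print(f"Effective patch rate: {rate:.0%}")
```

With these numbers, a reported 100% shrinks to roughly 70% once coverage is taken into account.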

If you’re only looking at performance-based metrics (like time-to-patch) without also measuring and challenging assumptions about your underlying capabilities, you’re only telling half of the story and you risk misrepresenting the effectiveness of your program.

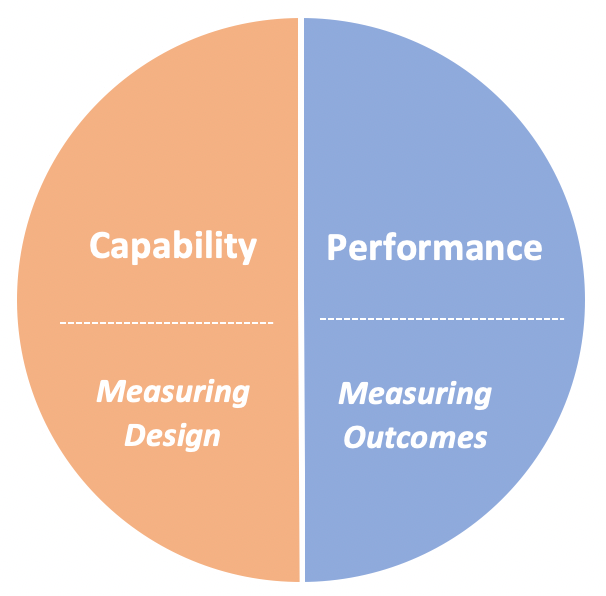

As such, I propose that an effective metrics program must measure both Capability and Performance.

Capability vs. Performance

Let me further define what I mean by Capability and Performance measures:

- Capability Measures - Is the program aligned with best practice and capable of achieving its defined goals?

- Performance Measures - How well is the program actually doing (i.e. outcomes)?

Capability measures speak to the potential to achieve the desired results (i.e. how well the program is designed), while Performance measures speak to the actual outcomes. Capability measures help validate your program design and instill confidence in your performance measures. They can also uncover gaps in your program that might otherwise go undetected. Together, capability and performance measures give a more complete picture of the overall state of your program.

Measuring Capability

While it’s true that part of measuring capability can include using industry-standard maturity models (e.g. CMMI), I propose there’s more to it than that. While Maturity is one important measure, I would assert that there are two other critical capability measures that every program should track: Visibility and Influence.

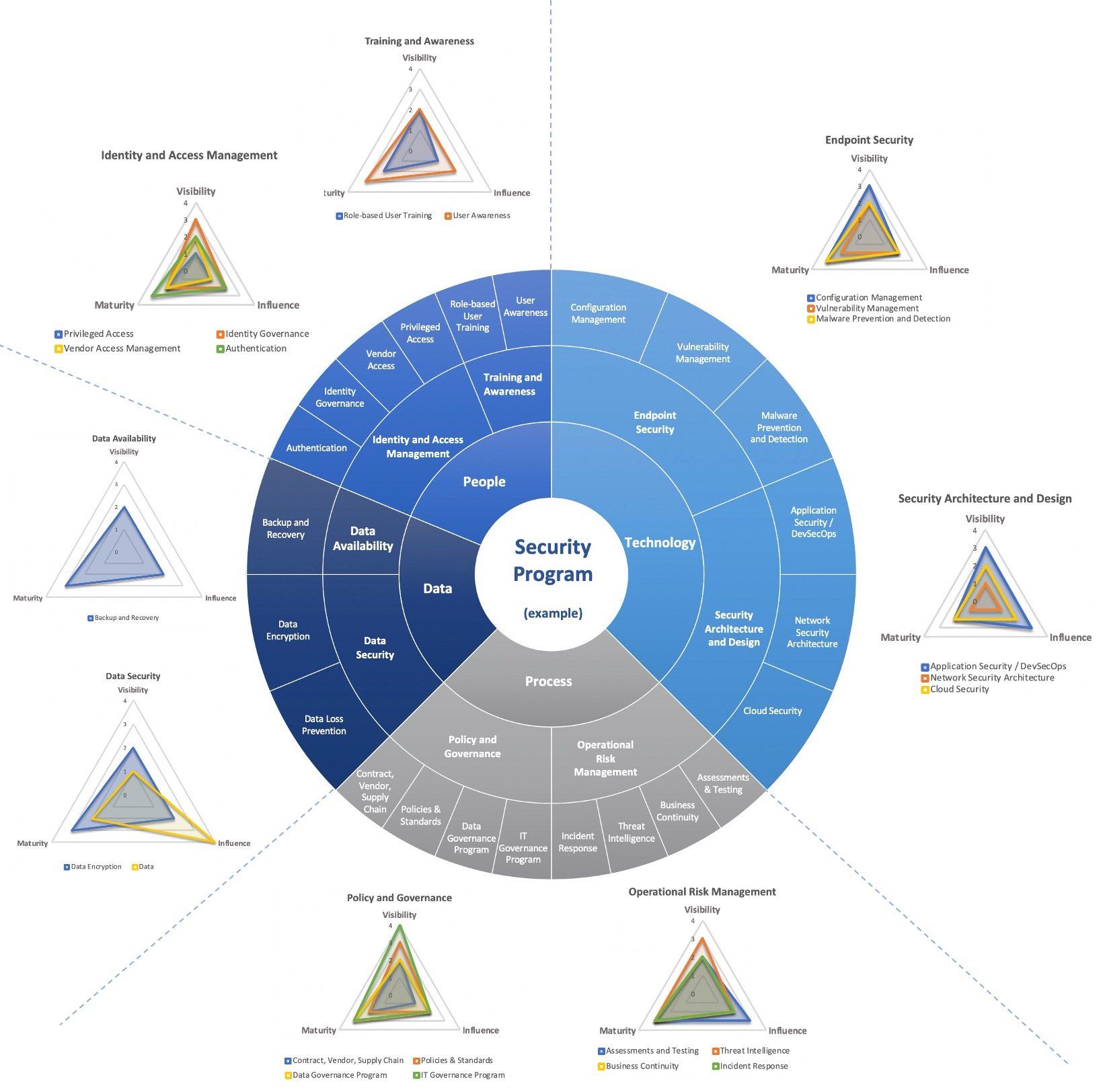

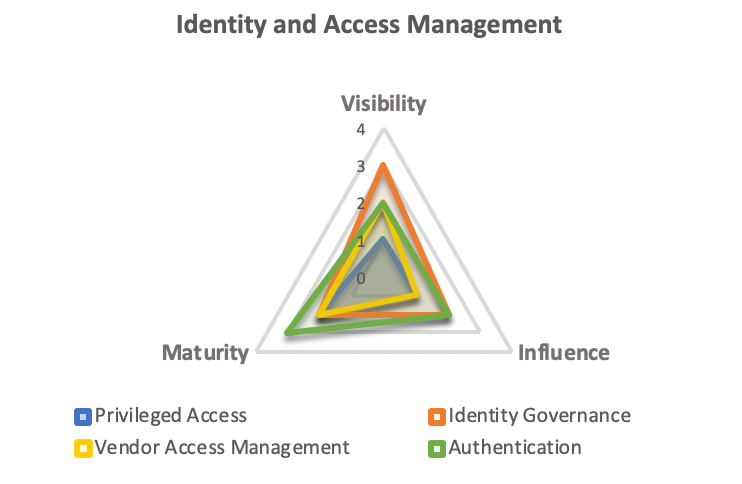

Further, I believe we should measure Visibility, Influence, and Maturity for each component of our security program (which would equate to everything represented on the outer ring of this notional security program visual from part 2 of this series).

I’ll explain what I mean by Visibility and Influence in more detail, but the overall concept of Measuring Capability is really quite straightforward:

- Visibility: are we seeing what we need to see?

- Influence: can we effect change everywhere we need to?

- Maturity: how well do we align with best practice?

Again, our goal is to measure Visibility, Influence, and Maturity for each component of the security program, and I’ll use Training and Awareness as a very simple example of how to do that.

| Program Component | Purpose / Scope |
|---|---|
| Training and Awareness | Ensure all members of the workforce receive timely and relevant security training and continuous engagement in order to minimize the occurrence of preventable incidents |

In order to measure Visibility and Influence for each component of our program, we’ll need to identify what I call the "target population" – what (or who) will be the focus (or target) of our activities. Every component of the security program should have a target population which typically aligns with your defined scope. For vulnerability management, the target population might be all endpoints operating in your environment. For data loss prevention, it might be all sensitive data. For disaster recovery, all business-critical systems. In the case of our example (Training and Awareness) it will be “all members of the workforce”.

We’re now going to use that target population to measure Visibility and Influence.

Measuring Visibility

If you can’t see something, there’s a good chance you can’t do anything about it. When it comes to a desired outcome like risk reduction, we don’t want to make assumptions about the comprehensiveness of our security control coverage. By measuring Visibility for each component of our security program, we can gain some level of confidence that we’re applying our efforts to the entire scope of the things that we should be.

In order to consistently measure Visibility across the entire security program, let’s first establish a standard rating scale:

| Rating | Visibility Definition |
|---|---|
| None (0) | There is no visibility. The true scope is unknown and there is no method to determine changes to the target population. |
| Low (1) | Our ability to identify, measure, and monitor the target population is decentralized, ad-hoc, or on-demand. As a result, there is doubt that we have a full view of the population or that we are keeping up with changes to that population. |
| Moderate (2) | There is a centralized, standardized method for measuring and monitoring, but there is no confidence that it provides complete visibility. Real-time insight into changes to the environment is limited or non-existent. |
| High (3) | There is a centralized and standardized method to measure and, while it is believed there is complete visibility, monitoring for changes is still largely on-demand, manual, or otherwise infrequent, so those changes may go unnoticed for some period of time. |
| Optimized (4) | There is a centralized and standardized method to measure the target population, with real-time monitoring to account for any changes and ensure complete visibility. |

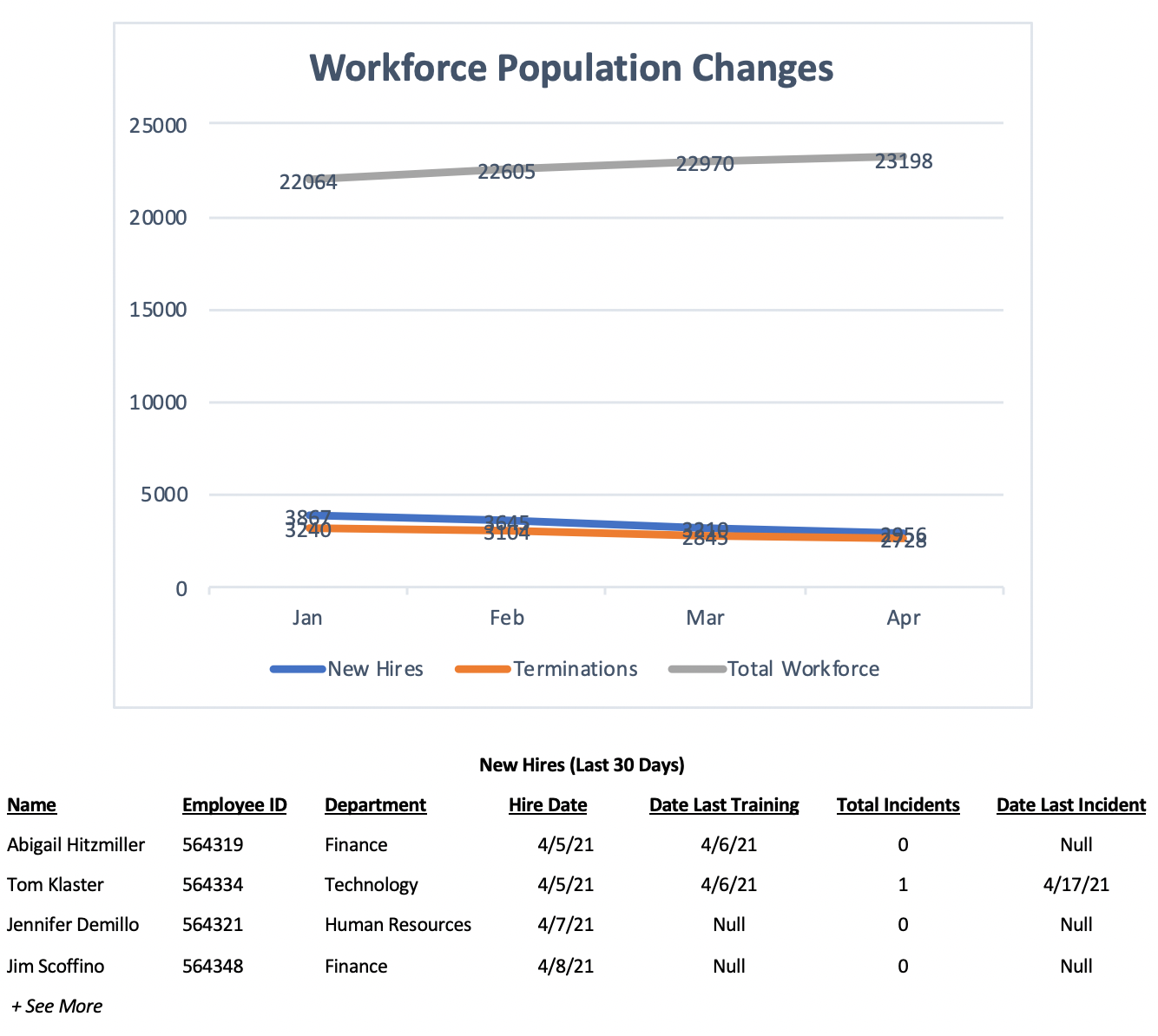

Next we need to determine which metrics to collect in order to effectively rate ourselves 0-4 for Training and Awareness (keeping in mind the purpose and target population of “All members of the workforce”).

Let’s say in our example we’ve identified a need to measure the following:

- Any changes to the target population (new hires, terminations)

- Training status for every member of the workforce

- Phishing incident data for every member of the workforce

Let’s also assume for purposes of this example that we get a daily feed from HR of all new hires and terminations. In addition, we receive a feed of daily training module compliance data for every user in the organization so we also have a means to assess current training status for every member of the workforce. Our incident response data is also updated real-time and correlated with workforce data so we also have the ability to link incident occurrences to each member of the workforce. We feed all of this data to our centralized SIEM, giving us on-demand, near-real-time visibility so we can be assured that we always have the latest training and incident data for any user in the organization.

Based on the rating criteria for Visibility, we might rate ourselves at 4 (Optimized) for this component of our program. As such, we should have a fairly high level of confidence that we can maintain continued visibility of our target population and that any future performance measures we use to demonstrate the effectiveness of our training and awareness efforts are representative of the entire workforce.

Measuring Influence

We may have really good visibility of our target population, but just because we can see something doesn’t mean we can do anything about it. We can scan for vulnerabilities, but can we also install patches? We can detect configuration drift, but can we fix it too? We need to be able to demonstrate with confidence the extent to which our security program is going to be able to have the intended impact on the target population. This is why it’s equally important that we also measure “Influence”.

Similar to Visibility, we’ll leverage a common rating scale for measuring Influence:

| Rating | Influence Definition |
|---|---|
| None (0) | We have no influence. All efforts are wasted. |
| Low (1) | We are only able to influence a small portion of the target population, leaving the majority unaffected by this security program activity. |
| Moderate (2) | We can influence much of the population, though a portion remains unaffected by this security program activity. |
| High (3) | We can influence nearly the entire population and have ad-hoc mechanisms in place for identifying outliers and incorporating them into our scope. |
| Optimized (4) | We are confident that the entire target population is affected by this activity and any outliers are immediately identified and targeted through automation. |

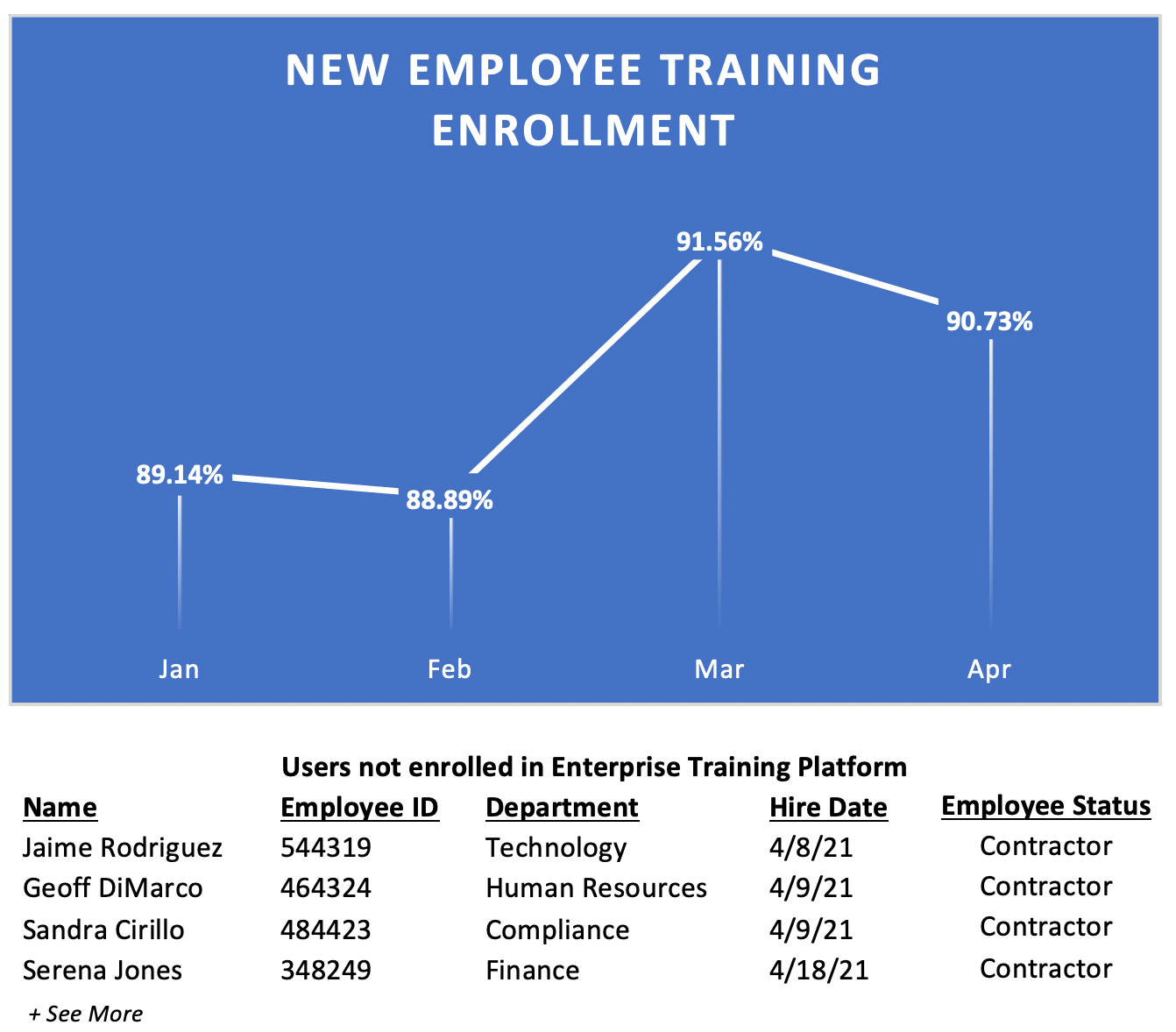

Continuing with our example, let’s assume our ability to Influence the target population (all members of the workforce) hinges on our ability to deliver and enforce the completion of security awareness training. For simplicity’s sake let’s also assume that we rely on a single enterprise training platform as the only method to enroll users in mandatory security training classes. We will therefore simply choose to measure Influence as the:

- Percentage of workforce registered in enterprise training platform

Fortunately, we are also able to get this enterprise training user registration data as a daily feed into our SIEM.

However, as we’re validating our ability to Influence the target population by comparing the user registration data for our training platform to our daily HR feeds, we learn that contracted staff (who account for about 10% of the workforce) cannot be registered in the training platform and therefore cannot be assigned mandatory training.

While we’ve established that we have 100% Visibility of that population, our potential Influence is limited to 90%. Given the standard rating criteria for Influence, we may choose to conservatively rate ourselves as Moderate (2) until such time that we can fix the issue or identify an alternative method to deliver training to those staff.
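The comparison between the HR feed and the training platform registrations can be sketched as a simple set operation. This is only an illustration: the function name `influence_coverage` and the user identifiers are hypothetical, and the real feeds would of course come from your HR system and training platform rather than hard-coded sets. The sketch stops at the percentage on purpose, since translating it into a 0-4 rating is a judgment call against the rating criteria.

```python
def influence_coverage(workforce: set[str], registered: set[str]) -> tuple[float, set[str]]:
    """Return the share of the workforce we can reach, plus who we cannot reach."""
    if not workforce:
        raise ValueError("workforce must not be empty")
    reachable = workforce & registered      # in HR feed and in the training platform
    unreachable = workforce - registered    # in HR feed but missing from the platform
    return len(reachable) / len(workforce), unreachable


# Hypothetical feeds: nine employees are registered, one contractor is not.
hr_feed = {f"emp{i}" for i in range(1, 10)} | {"contractor1"}
training_platform = {f"emp{i}" for i in range(1, 10)}

coverage, gaps = influence_coverage(hr_feed, training_platform)
print(f"Influence coverage: {coverage:.0%}, unreachable: {sorted(gaps)}")
```

Keeping the list of unreachable users alongside the percentage makes it easier to explain the gap and to track it as it closes.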

Had we not taken the time to assess our level of Influence, we may have missed this gap in our Capability and incorrectly assumed the Performance measures we collect were representative of the entire workforce.

Notice that I didn’t mention anything about analyzing the actual training results, the impact our training activities were having on incident trends, or any other measures that might indicate whether we’re achieving the goals of our program. While certainly important, those are Performance (not Capability) measures and we’ll get into those in the next post of this series.

Measuring Maturity

So far we’ve covered two of the three Capability measures - Visibility and Influence. The last Capability measure involves assessing our alignment with best practices in order to demonstrate whether we’re doing all of the things we should be doing in our security program.

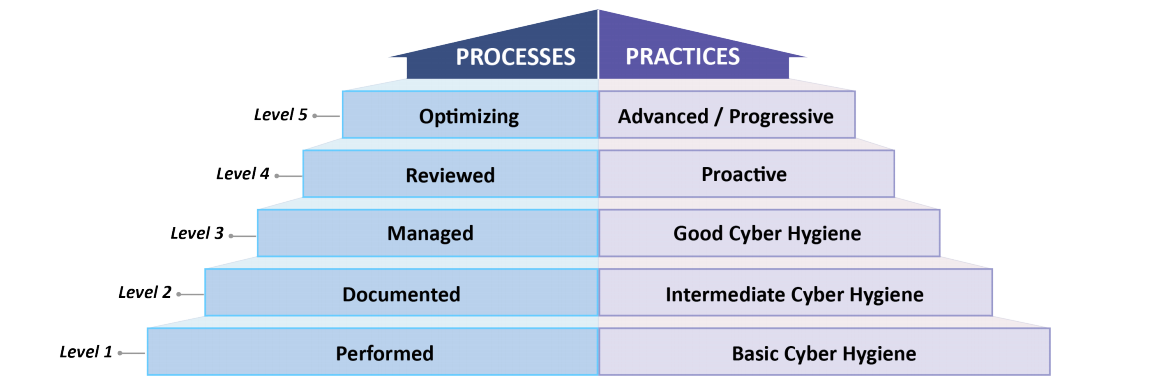

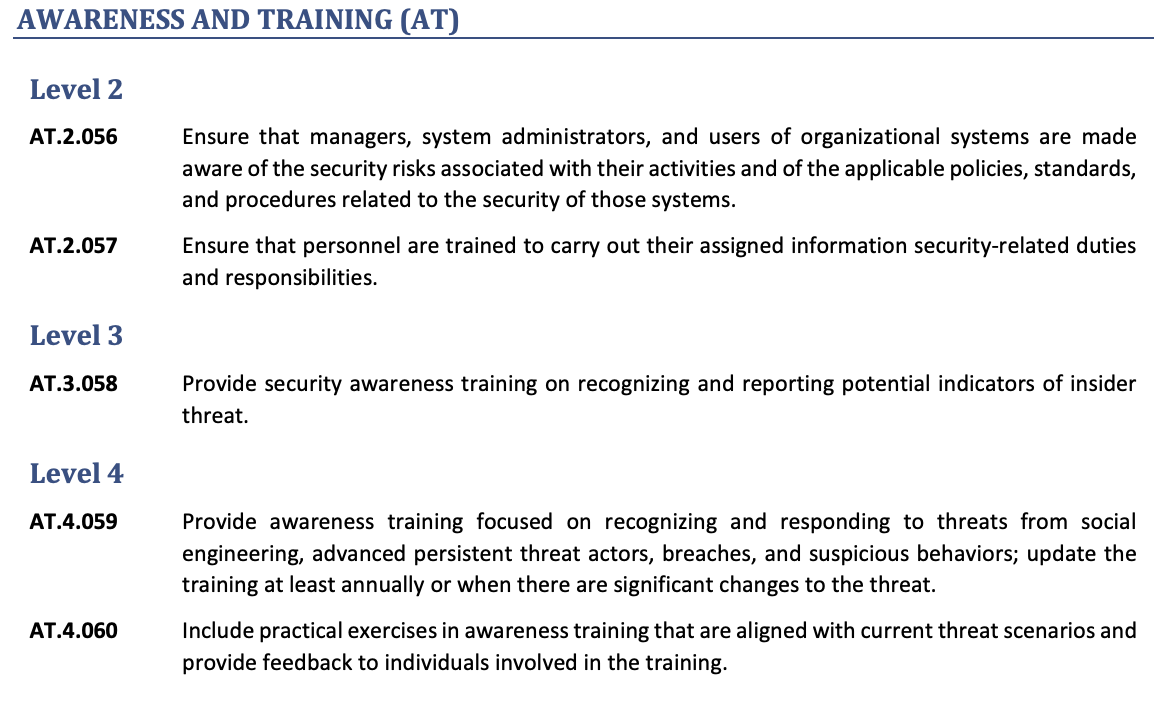

While measuring Visibility and Influence may be a new concept to some, maturity models have been around for some time, and I would recommend leveraging an existing maturity model or framework, of which there are several. They’re certainly not all great, but one that I would recommend at least considering is the Cybersecurity Maturity Model (CMM). CMM covers activities within 14 different domains (from Access Control to Physical Protection) and outlines recommended best-practice activities at 5 different levels of maturity (Performed through Optimizing).

Since CMM is also a five-level model you can fairly easily map the ratings to the same scale we used to measure Visibility and Influence (0-4). Here is a snapshot of the Training and Awareness section:

As you’re assessing your alignment to the Processes and Practices of a model like CMM, be sure to produce demonstrative evidence of your maturity rating. If Level 4 requires specific training topics or that your training content be updated at least annually, you should document evidence of how you do that. This not only forces you to validate your program activities, but it can also help prepare for future audits. Also, you should always “round down” when rating your program against the maturity criteria. If a given maturity level says you should be doing three things and you’re only doing two of them, you haven’t achieved that maturity level yet. However, filling that gap becomes a tangible and justifiable goal that you would then incorporate into your work plan to demonstrate how you’re continuously maturing your program.
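The “round down” rule is easy to express in code. The sketch below is one possible interpretation, not part of any maturity model: the function name `maturity_level`, the level numbers, and the practice checklists are all hypothetical, and it assumes levels are cumulative (you cannot claim a level until every lower level is fully met).

```python
def maturity_level(practices_met: dict[int, list[bool]]) -> int:
    """Return the highest level whose practices, and those of every lower
    level, are all satisfied. Returns 0 if the first level is not fully met."""
    achieved = 0
    for level in sorted(practices_met):
        if not all(practices_met[level]):
            break  # a partially met level does not count: round down
        achieved = level
    return achieved


# Hypothetical self-assessment: level 3 lists three practices, only two are met.
assessment = {
    1: [True, True],
    2: [True],
    3: [True, True, False],
    4: [True],
}
print(maturity_level(assessment))  # prints 2
```

Note that level 4 is ignored here even though its single practice is met, because the gap at level 3 has to be closed first.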

Many of these maturity models aren’t perfect or even complete, so you may need to adapt them to fit your specific Security Program scope. Even so, I would still recommend demonstrating alignment with an industry best practice whenever possible rather than trying to create your own maturity benchmark from scratch.

Putting the Capability Measures together

With Visibility, Influence, and Maturity measures we can clearly demonstrate how well we align with best practices and instill confidence that we have designed our security program to be successful within our specific environment by ensuring continued visibility and the ability to effect change where needed.

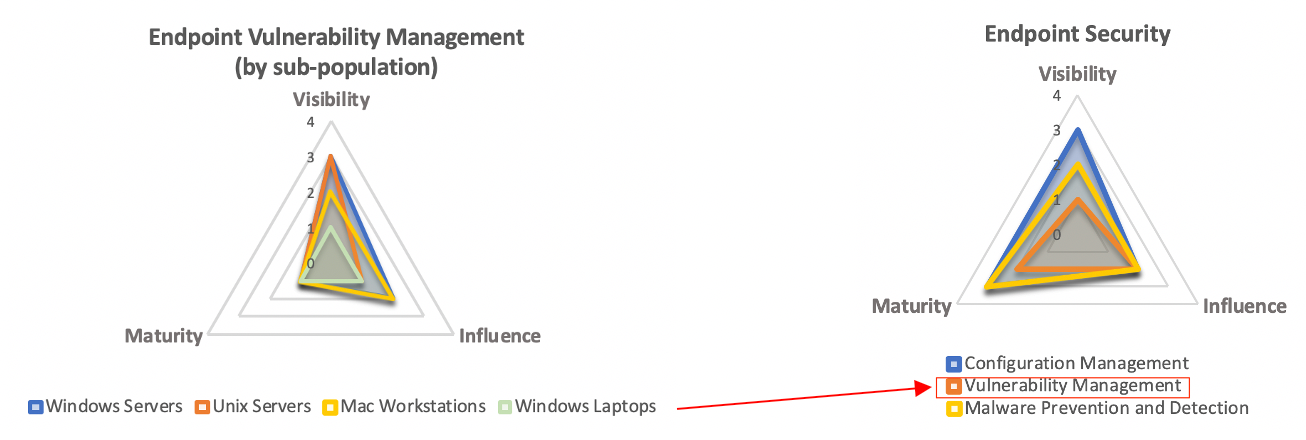

These capability measures are easy to visualize …

… and by having these common definitions and rating scales for Visibility, Influence, and Maturity, we can standardize our measures across every component of our security program which will help identify where we may need to focus improvement efforts.
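As a rough illustration of what standardizing on a common scale buys you, the sketch below stores the three ratings per program component and sorts components by their weakest measure. The class name `CapabilityRating` and all of the numbers are hypothetical, apart from the Visibility (4) and Influence (2) ratings for Training and Awareness, which come from the example earlier in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapabilityRating:
    component: str
    visibility: int  # 0-4
    influence: int   # 0-4
    maturity: int    # 0-4

    def __post_init__(self) -> None:
        # Reject anything outside the shared 0-4 rating scale.
        for name in ("visibility", "influence", "maturity"):
            value = getattr(self, name)
            if not 0 <= value <= 4:
                raise ValueError(f"{name} must be between 0 and 4, got {value}")

    @property
    def weakest(self) -> int:
        return min(self.visibility, self.influence, self.maturity)


# Maturity values and the Vulnerability Management row are made up for illustration.
ratings = [
    CapabilityRating("Training and Awareness", visibility=4, influence=2, maturity=3),
    CapabilityRating("Vulnerability Management", visibility=1, influence=2, maturity=2),
]

# Components with the lowest single rating come first.
ordered = sorted(ratings, key=lambda r: r.weakest)
for r in ordered:
    print(f"{r.component}: V={r.visibility} I={r.influence} M={r.maturity}")
```

Sorting by the weakest measure is just one way to surface candidates for improvement; your risk model and priorities may suggest a different ordering.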

Remember, capability measures are only half of the story and in the next post I’ll show you how to combine these with performance (outcome) measures to give a more complete picture of the state of your program.

A few other thoughts

Scope and target population may dictate the complexity of your capability measures

The very basic example of Training and Awareness that I used involved a narrow scope and a simplified target population (“all members of the workforce”). But what happens if our target population isn’t so simple?

Consider endpoint vulnerability management, where the scope might be defined as “all devices connected to the network”. That could include servers, desktops, laptops, and mobile devices of varying operating systems, each managed by different IT teams using different asset management technologies. Unfortunately, that means we’ll have to collect measures from multiple sources and may have varying degrees of Visibility, Influence, and Maturity between endpoint types. In these situations, I recommend dividing your target population into relevant “sub-populations”, collecting Capability measures for each, and assigning them the appropriate Capability rating based on those measures.

For example, let’s say your environment has exactly 20,000 endpoints broken up as follows:

| Endpoint Type | Quantity | Visibility Rating |
|---|---|---|
| Windows Servers | 5,000 | 3 |
| Unix Servers | 2,500 | 3 |
| Windows Laptops | 10,000 | 1 |
| Mac Laptops | 2,500 | 2 |

You can use a weighted average (perhaps based on their respective size) to come up with the overall Visibility score that represents all of the various endpoint types.

- Overall visibility rating: ((3 x .25) + (3 x .125) + (1 x .5) + (2 x .125)) = 1.875 = 1 (rounded down)

You can do the same for Influence and Maturity and use these averages to derive your overall Capability ratings for Vulnerability Management as a whole. This allows you to report on the higher-level summary data but still retain the details in case you have to explain the ratings or how you intend to prioritize improvement efforts (in this case, perhaps focusing on Windows laptops first since they make up a significant portion of the endpoint environment).
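For anyone who wants to automate this, here is a minimal Python sketch of the size-weighted average using the quantities and Visibility ratings from the endpoint table above. The function name `weighted_rating` is an assumption for illustration; the weights are normalized inside the function, so you can pass raw endpoint counts directly.

```python
import math


def weighted_rating(subpopulations: list[tuple[str, float, int]]) -> float:
    """Weighted average of ratings; each entry is (name, weight, rating)."""
    total_weight = sum(weight for _, weight, _ in subpopulations)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    return sum(weight * rating for _, weight, rating in subpopulations) / total_weight


# (endpoint type, quantity used as weight, visibility rating) from the table.
endpoints = [
    ("Windows Servers", 5_000, 3),
    ("Unix Servers", 2_500, 3),
    ("Windows Laptops", 10_000, 1),
    ("Mac Laptops", 2_500, 2),
]

score = weighted_rating(endpoints)
overall = math.floor(score)  # round down, consistent with the maturity guidance
print(f"Weighted score: {score}, overall rating: {overall}")
```

Because the function only cares about relative weights, swapping endpoint counts for a risk or importance score requires no other change.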

Depending on the population or program component that you're measuring, you may decide to give different weights to each sub-population based on something other than size ratio (risk, importance, etc). Either way, breaking these out into sub-populations allows you to more accurately measure and more clearly identify gaps and areas of improvement.

Measuring maturity by benchmarking against peers

I’ve heard peer benchmarking touted as a means of assessing program maturity. I’ll play devil’s advocate by saying this isn’t a competition so who cares what your peers are doing? Would you only start thinking about business continuity or identity and access management if your peers were doing it? And if they’re not, does that mean you shouldn’t either? While most of us face similar risks, not every organization has the same priorities or faces the same challenges (even if they are in the same industry), so comparing your program to another organization may not be relevant anyway. And while there’s nothing wrong with aligning with best practices and maturity models, it shouldn’t matter how well other organizations implement them – just how well you do.

I’m taking a bit of an extreme position here to make a point, but if you really want to benchmark your program against your peers, you should be doing it for the right reasons. Perhaps you want to identify areas that you missed. Perhaps you need the benchmark to help justify funding for a particular project (not a great reason but unfortunately sometimes necessary). These may be good reasons to leverage peer benchmarking, but I would strongly caution against using it as a primary driver for investments or a means to justify the adequacy of your program design.

At the end of the day, you should be striving for a particular program maturity level because that’s what’s required in your organization to adequately mitigate risk or better enable the business. Let your metrics, risk model, and organizational priorities (not what others are doing) be the primary drivers of where you focus your efforts.

Recap

Programs should be assessed based on two types of measures:

- Capability Measures - Is the program aligned with best practice and is it capable of achieving its defined goals?

- Performance Measures - How well is the program actually doing (e.g. outcomes)?

Capability and performance measures should be tracked for each component of your security program.

There are three fundamental Capability Measures:

- Visibility - are we seeing the things we should be seeing?

- Influence - can we effect change everywhere we need to?

- Maturity - to what degree does the program align with best practices?

Some of the benefits of Capability measures include:

- They can identify gaps in the security program that performance measures may miss

- By validating program design and coverage, they instill greater confidence in performance measures

- They demonstrate the degree to which the security program aligns with best practice

- Concepts like Visibility, Influence, and Maturity aren’t heavily rooted in security- or technology-centric concepts and are easy to explain to executives and non-technical stakeholders

What’s Next?

I focused this post on how and why to measure Capability, but as I said, that is only half of the equation. In the next post I will delve into performance measures and the importance of measuring outcomes to demonstrate the impact the security program is having. I’ll also give some tips on how to use these metrics to prioritize initiatives and communicate them to executive stakeholders.

So stay tuned for the next post but in the interim, I enjoy connecting with and learning from others, so reach out to me on LinkedIn and Twitter.